Fine-Grained RBAC For GitHub Action Workflows With GitHub OIDC and HashiCorp Vault

Senior Manager, Product Security

In our last article, we discussed how a developer-first, contextual approach to secrets management enables a security program to meet the speed and scale of modern businesses. In this article, we explain how we accomplished this by using GitHub’s OpenID Connect (OIDC) support to authenticate to fine-grained HashiCorp Vault roles, resulting in a “credentials-free” experience for development teams in our deployment pipelines. For organizations running CI/CD through GitHub Actions and managing their secrets with HashiCorp Vault, we’ve found a process that offers a streamlined experience for developers while simplifying orchestration and management for security.

In this article, we step through the technical implementation we used between GitHub and Vault to support this OIDC flow for secrets consumption. We cover both the programmatic components of this secrets management pattern and the engineering details other organizations may wish to adapt to create their own versions of this program. At the end of the article, we share a Terraform module we have open sourced to help any organization get up and running with a similar initiative.

The “Secret Zero” Problem

A common concern with many secrets management efforts is the “secret zero” problem. An organization stores all of its secrets in some kind of protected enclave, such as HashiCorp Vault or 1Password. The organization needs to restrict access to the secrets and to segment which secrets each group can access. Therefore, roles are created, and the credentials to authenticate to those roles are distributed to the appropriate users, teams, and systems.

But where can those authentication credentials be safely stored? Not in the secret store, as these credentials are a precondition to getting access. Could they be stored in a separate protected enclave? But how is access to that system protected? It’s turtles all the way down. This first set of login credentials used to gain access to the secrets store is often denoted “secret zero.”

Current Alternatives Require Complex Management

One common solution to the “secret zero” problem is to introduce another secret enclave that contains an already trusted entity at the point where access to the secrets store is needed. For example, if a company’s secrets are stored in HashiCorp Vault, static long-lived credentials to Vault (e.g. userpass, AppRole) can be generated and stored in GitHub as encrypted secrets. A repository is granted access to these secrets through the access control settings GitHub provides at the repository or organization level. Depending on the operating environment and the company in question, this may be sufficient to allow a GitHub Action workflow secure access to secrets in Vault.

For many organizations, however, this approach necessitates complex management procedures. An organization should be able to produce the following information about its secrets management program:

- A mapping of which authentication roles are used by which repositories
- The secrets accessible to each team’s projects
- Whether a team’s access is too broad or too restrictive
- Evidence of adherence to specific compliance requirements

GitHub encrypted secrets do not currently provide a way for a company to produce this information. A Vault role with static credentials may be created for a particular use case, but an organization cannot natively confirm that those credentials have not also been stored in a second repository’s secrets and used for an unintended purpose.

Moreover, these credentials are all static and long-lived, posing a risk to the organization if the deployment workflow is compromised. Stolen credentials continue to be a significant factor in breach incidents. All of a sudden an organization must build a sprawling asset management system for its secret store login credentials that is likely perpetually out of date, build a homegrown credential rotation and auditing lifecycle, or entirely give up on understanding these relationships and accept the risk this lack of visibility poses. This approach is certainly better than plaintext exposure! But GitHub OIDC offers a better solution.

GitHub OIDC

GitHub launched OIDC support within GitHub Actions in October 2021 to enable cloud deployment workflows to authenticate to their services without needing to handle credentials inside the GitHub repo. From their roadmap issue: “OpenID token exchange eliminates the need for storing any long-lived cloud secrets in GitHub.” By using OIDC authentication to Vault, we remove the need for engineers to manage a root credential pair and solve the “secret zero” problem for these workloads!

Discussing OAuth2 and OpenID Connect is outside the scope of this article, but this introduction to OAuth and OIDC from Okta serves as a helpful visual explainer. At a high level, OIDC is a way for an identity provider (IdP), in this case GitHub, to assert the identity of a user or service to a third party, in this case Vault, using a signed JSON Web Token (JWT). Instead of managing login credentials, we bind a Vault role against parameters in the token (known as claims). When GitHub presents a token containing the necessary combination of claims, Vault returns an auth token for the given Vault role.

Fine-Grained CI/CD Roles

Beyond solving the “secret zero” problem, using GitHub OIDC for authentication provides greater flexibility to fine-tune least-privilege access to roles. For example, beyond simply delineating between repositories inside an organization, GitHub OIDC auth allows us to bind specific workflows inside a repository to different Vault roles in an auditable, consistent manner. Suddenly, we can not only definitively answer the question “What Vault roles are used by which repositories?” through native properties of our authentication configuration, but we are capable of asking - and answering - the more granular “in what scenarios can a repository access a Vault role?”

Beyond the question, “what secrets can team X’s project access?” we can enforce what different sets of secrets team X’s project can access during deployments, CI testing, and other use cases as a native property of our authentication scheme. And we can accomplish all of this without requiring developers to handle credentials to Vault themselves, without having to deal with static credential rotation lifecycles or exposure, with credential TTLs in the seconds or minutes, and with complete auditability designed into the configuration-as-code approach.

Developer Use Cases

As we discussed in our previous post on developer-first security, a developer-first security approach integrates into the organization’s existing development workflows. The first step is to document the workflows used by development teams. This is unique for every organization, but there are general patterns we can discuss. For DigitalOcean, we began with the following five use cases:

- Testing pull requests - A continuous integration (CI) workflow testing pull requests in a repository needs to access nonproduction secrets.
- Continuous deployment (CD) triggers - Pushes to the main branch trigger a continuous deployment workflow that builds a new version of the application and deploys it to production. This workflow needs access to production secrets.
- Complex, multi-environment workflows - A single workflow that deploys first to a staging environment, verifies correct functionality, and then deploys the application to production should have access to staging and production secrets at each respective point inside the workflow, but should not be able to access both staging and production secrets at the same time.
- Supports monorepos - Multiple teams contributing to a monorepo can define individual workflow files in .github/workflows/ inside the same repository and get access to their own credentials, which other teams and workflows inside the monorepo cannot access.
- Reusable & shareable workflows - An internal reusable workflow, such as a set of tasks encapsulating publishing artifacts to Artifactory, can access its needed secrets when called from any repository across multiple GitHub organizations. Consumers invoking the workflow do not need to configure anything unique for access to secrets.

The following security considerations apply to each developer use case:

- Credentials must be short-lived. Compromise of any workflow must present an extremely minimal window of opportunity for a malicious entity to exploit these credentials.
- Secrets consumption must be fully auditable - we must be able to determine what repository accessed what Vault role (and therefore consumed what secrets) at some specific time. We must also be able to determine what secrets could be consumed by a repository or workflow at any given time.

Configuring Vault for GitHub OIDC

Let’s step through how the OIDC configuration can be bound to Vault and how to provide the fine-grained customizability to match these developer and security use cases. These code examples will use Terraform.

Enabling a GitHub OIDC configuration on Vault’s end requires creating a new JWT auth backend pointing to GitHub.com or to a GitHub Enterprise Server instance. GitHub has documentation on how to construct the URL for a GitHub Enterprise Server.

resource "vault_jwt_auth_backend" "github_oidc" {
  description        = "Accept OIDC authentication from GitHub Action workflows"
  path               = "gha"
  oidc_discovery_url = "https://token.actions.githubusercontent.com"
  bound_issuer       = "https://token.actions.githubusercontent.com"
}
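For GitHub Enterprise Server, GitHub’s OIDC documentation gives the token issuer as https://HOSTNAME/_services/token. A minimal sketch of the same backend pointed at a self-hosted instance is below; github.mycompany.com is a placeholder hostname, and the block is meant to be used in place of the github.com backend above (both mount at "gha"), not alongside it.

```hcl
# Sketch: JWT auth backend for a GitHub Enterprise Server instance.
# "github.mycompany.com" is a placeholder; substitute your GHES hostname.
# Use this instead of the github.com backend; both mount at path "gha".
resource "vault_jwt_auth_backend" "github_oidc_ghes" {
  description        = "Accept OIDC authentication from GitHub Enterprise Server workflows"
  path               = "gha"
  oidc_discovery_url = "https://github.mycompany.com/_services/token"
  bound_issuer       = "https://github.mycompany.com/_services/token"
}
```

Every role defined later in this article would then reference this backend’s path in the same way.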

At this point, Vault and GitHub are configured to talk to each other. What’s left is defining each use case as its own Vault role configuration on this authentication backend. This is the meat of the configuration and what to do depends on the needs of the developers in your organization.

An extra configuration step for GitHub Enterprise Cloud

Organizations configuring OIDC authentication from github.com should take an additional configuration step: switch to a unique token URL. Setting the bound_issuer and oidc_discovery_url to https://token.actions.githubusercontent.com grants the entirety of public GitHub the possibility of authenticating to your Vault server. If you accidentally misconfigure the bound claims that we describe below, you could be exposing your Vault server to other users on github.com.

To prevent this, GitHub has recently added an API-only setting that lets an enterprise customize its token URL to https://token.actions.githubusercontent.com/<enterpriseSlug>, where enterpriseSlug is the value that was set when your enterprise cloud account was created. We strongly recommend that any enterprise cloud organization using GitHub OIDC enable this setting. With it enabled, no matter how the bound claims described below are configured, other users or enterprises on github.com cannot obtain an OIDC token that your Vault server will accept. Both the oidc_discovery_url and bound_issuer should use this new token URL.

resource "vault_jwt_auth_backend" "github_oidc" {
  description        = "Accept OIDC authentication from GitHub Action workflows"
  path               = "gha"
  oidc_discovery_url = "https://token.actions.githubusercontent.com/mycompany"
  bound_issuer       = "https://token.actions.githubusercontent.com/mycompany"
}

This does not apply to GitHub Enterprise Server accounts, as the self-hosted instance is already unique to your enterprise.

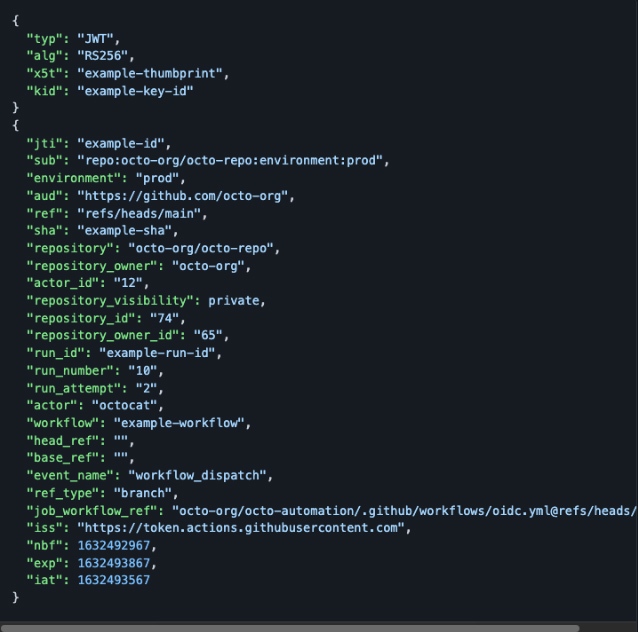

The GitHub JWT

The claims provided in GitHub’s JWT define our authentication configuration capabilities. We can bind any combination of these key-value pairs to a Vault role, thereby requiring all of that data to exist in a GitHub workflow’s JWT before granting access to a Vault role and its underlying policies. The following is an example GitHub JWT displaying the claims contained in a token:
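As a reference point, the sketch below records an abridged set of string claims from a GitHub Actions OIDC token, written as a Terraform locals map so it can sit next to role definitions. The claim names follow GitHub’s OIDC documentation; every value is an illustrative placeholder modeled on GitHub’s documented example, not a token from one of our workflows, and numeric claims such as exp and iat are omitted.

```hcl
# Reference only: claim names from GitHub's OIDC token documentation,
# with placeholder values. Nothing in Vault reads this map.
locals {
  example_github_oidc_claims = {
    iss              = "https://token.actions.githubusercontent.com"
    aud              = "https://github.com/octo-org"
    sub              = "repo:octo-org/octo-repo:environment:prod"
    repository       = "octo-org/octo-repo"
    repository_owner = "octo-org"
    actor            = "octocat"
    ref              = "refs/heads/main"
    ref_type         = "branch"
    event_name       = "workflow_dispatch"
    environment      = "prod"
    workflow         = "example-workflow"
    job_workflow_ref = "octo-org/octo-automation/.github/workflows/oidc.yml@refs/heads/main"
  }
}
```

Any of these keys can appear in a role’s bound_claims; sub and job_workflow_ref are the two we rely on most in the examples that follow.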

Leveraging JWT Claims For Fine-Grained Access

The primary property we use at DigitalOcean is the bound subject (sub) claim, although simple use cases can use alternative JWT properties. For example, to allow one repository to access a certain Vault role while preventing other repositories from authenticating, we can bind the repository claim to a Vault role instead.

resource "vault_jwt_auth_backend_role" "github_oidc_role" {
  role_name    = "myrepo-myrole"
  bound_claims = { repository = "digitalocean/myrepo" }
  # Required configuration attributes
  token_policies  = ["default", "mypolicy"]
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}
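The role above refers to a Vault policy named "mypolicy" by string, which must already exist in Vault. A minimal sketch of such a policy follows, assuming the secrets live in a KV version 2 engine mounted at secret/; the path is a placeholder.

```hcl
# Hypothetical read-only policy backing the "mypolicy" entry in token_policies.
# Assumes a KV v2 engine mounted at secret/; adjust the path to your layout.
data "vault_policy_document" "mypolicy" {
  rule {
    path         = "secret/data/myteam/myproject/*"
    capabilities = ["read"]
  }
}

resource "vault_policy" "mypolicy" {
  name   = "mypolicy"
  policy = data.vault_policy_document.mypolicy.hcl
}
```

Referencing vault_policy.mypolicy.name in token_policies instead of the literal string would let Terraform create the policy before the role that uses it, which is the pattern the complete examples later in this article follow.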

More commonly, however, we want finer-grained delineation, such as separating pull request workflows from a deployment workflow triggered from the main branch. For this, we can create two separate roles on Vault, each granting access to a respective development or production policy. The subject claim enables us to enforce these separate use cases:

resource "vault_jwt_auth_backend_role" "only_prs" {
  role_name    = "myrepo-prs"
  bound_claims = { sub = "repo:digitalocean/myrepo:pull_request" }
  # …
}

resource "vault_jwt_auth_backend_role" "only_main_branch" {
  role_name    = "myrepo-main"
  bound_claims = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
  # …
}

Workflows invoked inside of a pull request that attempt to receive an authentication token for the “myrepo-main” Vault role will fail, as the OIDC properties in the JWT will not match the preconfigured expectation in the bound_claims. Workflows from any event trigger that is not a pull_request, such as a push, will fail to authenticate to the “myrepo-prs” Vault role.

There are a number of ways to filter the subject claim. The options boil down to:

- pull_request (but no other) workflow triggers
- some specific branch on the repository
- some specific tag on the repository
- some wildcard pattern for multiple branches or multiple tags (e.g. ref:refs/tags/*)
- some GitHub Environment (or a wildcard pattern for multiple GitHub Environments, although we have not encountered a use case for this)
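To make the tag and wildcard filters concrete, the sketch below shows one role bound to a single tag and one bound to any tag. The repository, tag, and role names are placeholders, and token_policies lists only the default policy, where a real role would also attach its own policy.

```hcl
# Exact match: only workflows running for the v1.0.0 tag can authenticate.
resource "vault_jwt_auth_backend_role" "myrepo-tag-v1-0-0" {
  role_name       = "myrepo-tag-v1-0-0"
  bound_claims    = { sub = "repo:digitalocean/myrepo:ref:refs/tags/v1.0.0" }
  token_policies  = ["default"] # attach your own policy here
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}

# Wildcard: any tag. Without bound_claims_type = "glob", Vault compares
# the "*" literally and no real token would match.
resource "vault_jwt_auth_backend_role" "myrepo-any-tag" {
  role_name         = "myrepo-any-tag"
  bound_claims      = { sub = "repo:digitalocean/myrepo:ref:refs/tags/*" }
  bound_claims_type = "glob"
  token_policies    = ["default"] # attach your own policy here
  bound_audiences   = ["https://github.com/digitalocean"]
  role_type         = "jwt"
  backend           = "gha"
  user_claim        = "actor"
  token_type        = "batch"
  token_ttl         = 300 # seconds
}
```

Note that bound_claims_type applies to every value in bound_claims, so any literal "*" in another claim would also be treated as a wildcard.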

These five configurations give us almost all of the tools we need to solve the developer use cases we previously identified in this article. Combining other claims in the JWT with the bound subject (sub) gives us everything we need. Crucially, the method developers use to consume secrets remains consistent across all of these use cases. HashiCorp maintains a GitHub Action for consuming secrets in Actions workflows. A developer includes the name of their desired role and the secrets they wish to access:

- uses: hashicorp/vault-action@v2
  with:
    role: "myrepo-prs"
    secrets: |
      secret/data/their/chosen/secrets mysecret | MY_SECRET ;
    # Necessary configuration parameters
    url: "https://my-vault.company.com:8200"
    caCertificate: "optional yet likely for an enterprise vault configuration"
    method: "jwt"
    path: "gha"

If the expected bound claims match a user’s workflow for the requested Vault role, the workflow is granted a short-lived token. Because we set token_ttl on the Vault role to 300 seconds, the Vault token granted to each workflow expires after 5 minutes. This gives a malicious entity an extremely small window in which to exploit a valid auth token while leaving a legitimate workflow plenty of time to retrieve the secrets it requires. We’ve found that a 60-second TTL is sufficient in 80% of cases; we recently raised our default TTL from 60 seconds to 5 minutes to accommodate the remaining edge cases inside our organization. We will grant certain workflows a TTL of up to 30 minutes, but we have yet to find a use case that requires a Vault token for longer.
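A longer-lived exception differs from the default only in token_ttl. The sketch below shows a hypothetical role granted the 30-minute ceiling; the role name and bound subject are placeholders. Because batch tokens cannot be renewed, token_ttl is effectively the token’s entire lifetime.

```hcl
# Hypothetical role for a workflow that needs the longer 30-minute TTL.
# Batch tokens are not renewable, so token_ttl caps the token's lifetime.
resource "vault_jwt_auth_backend_role" "myrepo-long-running" {
  role_name       = "myrepo-long-running"
  bound_claims    = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
  token_policies  = ["default"] # attach the workflow's own policy here
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 1800 # 30 minutes
}
```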

Solving The Developer Use Cases

Let’s see how an OIDC configuration can enable each of the five developer use cases we listed above.

Testing Pull Requests

Example: A continuous integration (CI) workflow testing pull requests in a repository needs to access nonproduction secrets.

This can be enforced via the pull_request bound subject mentioned previously. A complete example is:

resource "vault_jwt_auth_backend_role" "myrepo-nonprod-prs" {
  role_name    = "myrepo-nonprod-prs"
  bound_claims = { sub = "repo:digitalocean/myrepo:pull_request" }
  # Required configuration attributes
  token_policies  = ["default", vault_policy.myrepo-nonprod-prs.name]
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}

data "vault_policy_document" "myrepo-nonprod-prs" {
  rule {
    path         = "secret/data/myteam/myproject/development"
    capabilities = ["read"]
  }
}

resource "vault_policy" "myrepo-nonprod-prs" {
  name   = "myrepo-nonprod-prs-policy"
  policy = data.vault_policy_document.myrepo-nonprod-prs.hcl
}

Continuous deployment (CD) triggers

Example: Pushes to the main branch trigger a continuous deployment workflow that builds a new version of the application and deploys it to production. This workflow needs access to production secrets.

Similarly, we can use the main branch bound subject construction provided earlier. A complete example of this configuration follows. Note the only material changes are to the role_name, bound_claims, and the contents of the policy this Vault role should be granted. The rest of the examples will focus on those values.

resource "vault_jwt_auth_backend_role" "myrepo-prod-branch-main" {
  role_name    = "myrepo-prod-branch-main"
  bound_claims = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
  # Required configuration attributes
  token_policies  = ["default", vault_policy.myrepo-prod-branch-main.name]
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_ttl       = 300 # seconds
  token_type      = "batch"
}

data "vault_policy_document" "myrepo-prod-branch-main" {
  rule {
    path         = "secret/data/myteam/myproject/production"
    capabilities = ["read"]
  }
}

resource "vault_policy" "myrepo-prod-branch-main" {
  name   = "myrepo-prod-branch-main-policy"
  policy = data.vault_policy_document.myrepo-prod-branch-main.hcl
}
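Since only the role name, bound claims, and policy contents change between these roles, one way to cut the repetition is to drive all three resources from a single map with for_each. This is our own illustrative sketch, not necessarily how the open-sourced module is structured; the map keys, subjects, and paths mirror the two examples above, and it would replace those individual resources rather than sit alongside them, since the role and policy names would collide.

```hcl
locals {
  # Hypothetical use cases: role name => bound subject and readable KV v2 path
  myrepo_roles = {
    "myrepo-nonprod-prs" = {
      sub  = "repo:digitalocean/myrepo:pull_request"
      path = "secret/data/myteam/myproject/development"
    }
    "myrepo-prod-branch-main" = {
      sub  = "repo:digitalocean/myrepo:ref:refs/heads/main"
      path = "secret/data/myteam/myproject/production"
    }
  }
}

data "vault_policy_document" "myrepo" {
  for_each = local.myrepo_roles
  rule {
    path         = each.value.path
    capabilities = ["read"]
  }
}

resource "vault_policy" "myrepo" {
  for_each = local.myrepo_roles
  name     = "${each.key}-policy"
  policy   = data.vault_policy_document.myrepo[each.key].hcl
}

resource "vault_jwt_auth_backend_role" "myrepo" {
  for_each        = local.myrepo_roles
  role_name       = each.key
  bound_claims    = { sub = each.value.sub }
  token_policies  = ["default", vault_policy.myrepo[each.key].name]
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}
```

Adding a new use case then means adding one map entry, which keeps the shared attributes consistent across every role.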

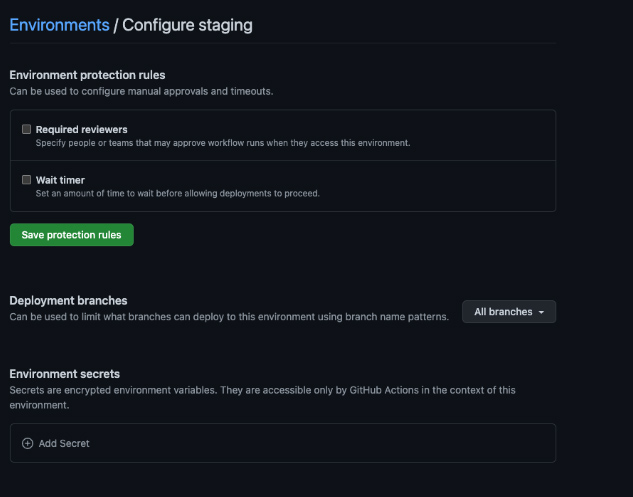

Complex, multi-environment workflows

Example: A single workflow that deploys first to a staging environment, verifies correct functionality, and then deploys the application to production should have access to staging and production secrets at each respective point inside the workflow, but should not be able to access both staging and production secrets at the same time.

This is a more complicated real-world use case. While there’s a bit more to configure on the GitHub side, the authentication to Vault remains consistent. As there are two sets of secrets involved here - staging secrets and production secrets - we want to create two corresponding Vault roles. However, the same workflow file needs both Vault roles, and we must ensure that no arbitrary task inside the workflow can ever access both sets of secrets.

To accomplish this, we will use one of the other bound subject filtering options: GitHub Environments. Environments are an access control feature on GitHub repositories. For this use case, we don’t need to configure the environments in any way aside from ensuring they exist on our developer’s repository.

First, we need to create staging and production environments, leaving all of the other settings blank.
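If repositories are already managed as code, the two environments could be declared with the integrations/github Terraform provider’s github_repository_environment resource, as sketched below. The provider configuration and the repository name are assumptions; creating the environments by hand in the repository settings works just as well.

```hcl
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

# Assumes a token with repository admin rights is supplied via GITHUB_TOKEN.
provider "github" {
  owner = "digitalocean"
}

# Empty environments: no reviewers, wait timers, or branch policies needed here.
resource "github_repository_environment" "staging" {
  repository  = "myrepo"
  environment = "staging"
}

resource "github_repository_environment" "production" {
  repository  = "myrepo"
  environment = "production"
}
```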

Second, we need to configure our two Vault roles, using an environment filter on the subject claim.

resource "vault_jwt_auth_backend_role" "myrepo-env-staging" {
  role_name    = "myrepo-env-staging"
  bound_claims = { sub = "repo:digitalocean/myrepo:environment:staging" }
  # rest of configuration
}

resource "vault_jwt_auth_backend_role" "myrepo-env-production" {
  role_name    = "myrepo-env-production"
  bound_claims = { sub = "repo:digitalocean/myrepo:environment:production" }
  # rest of configuration
}

This configuration means that a workflow job invoked under the staging GitHub Environment can retrieve an auth token for the myrepo-env-staging Vault role, while the production GitHub Environment can retrieve an auth token for the myrepo-env-production Vault role. Workflows not invoked under those environments will fail to authenticate to Vault, and since only one environment can be applied to a workflow job, neither environment can access the other environment’s secrets.

Third, we build our GitHub Actions workflow. To accomplish this use case of a continuous deployment pushing to staging, running some tests, then deploying to production, we can create two workflow jobs in which one job requires the other to have successfully completed. Each job is assigned its respective environment.

name: Continuous Deployment
on:
  push:
    branches:
      - main
jobs:
  deploy-staging:
    name: Deploy and Test App on Staging
    environment: staging
    runs-on: ubuntu-latest
    # These are the minimal permissions required if you want to use GitHub OIDC
    # https://docs.github.com/en/enterprise-server@latest/actions/deployment/security-hardening-your-deployments/about-security-hardening-with-openid-connect#adding-permissions-settings
    permissions:
      contents: read
      id-token: write
    steps:
      - uses: actions/checkout@v3
      - name: Import Secrets
        id: secrets
        uses: hashicorp/vault-action@v2
        with:
          role: "myrepo-env-staging"
          secrets: |
            secret/data/myteam/myproject/staging mysecret | MY_SECRET ;
          # Rest of the configuration
          url: "https://my-vault.company.com:8200"
          caCertificate: "optional yet likely for an enterprise vault configuration"
          method: "jwt"
          path: "gha"
          exportEnv: false
      - name: Deploy something
        run: # ...
        env:
          my_env_var: "${{ steps.secrets.outputs.MY_SECRET }}"
      - name: Test something
        run: # ...
  deploy-production:
    name: Deploy to Production
    environment: production
    # Production will only run if the staging job succeeds
    needs:
      - deploy-staging
    runs-on: ubuntu-latest
    permissions:
      contents: read
      id-token: write
    steps:
      - uses: actions/checkout@v3
      - name: Import Secrets
        id: secrets
        uses: hashicorp/vault-action@v2
        with:
          role: "myrepo-env-production"
          secrets: |
            secret/data/myteam/myproject/production mysecret | PROD_SECRET ;
          # Rest of the configuration
          url: "https://my-vault.company.com:8200"
          caCertificate: "optional yet likely for an enterprise vault configuration"
          method: "jwt"
          path: "gha"
          exportEnv: false
      - name: Deploy something
        run: # ...
        env:
          my_env_var: "${{ steps.secrets.outputs.PROD_SECRET }}"

We can additionally enforce that these environments can only authenticate from a specific workflow file using the technique for our next developer use case. That is, someone cannot create a new file in the repo, add the environment: production line, and access the production environment secrets from that other workflow.
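A sketch of that tightened production role follows. It is a variant of the myrepo-env-production role defined earlier and would replace that definition rather than sit alongside it; deploy.yml is a placeholder workflow file name, and token_policies shows only the default policy where the production policy would also be attached.

```hcl
resource "vault_jwt_auth_backend_role" "myrepo-env-production" {
  role_name = "myrepo-env-production"
  bound_claims = {
    sub = "repo:digitalocean/myrepo:environment:production"
    # Only this workflow file (placeholder name), as committed on main,
    # can use the production environment to authenticate
    job_workflow_ref = "digitalocean/myrepo/.github/workflows/deploy.yml@refs/heads/main"
  }
  token_policies  = ["default"] # plus the production secrets policy
  bound_audiences = ["https://github.com/digitalocean"]
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}
```

A new workflow file that declares environment: production would then present a different job_workflow_ref and fail to authenticate.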

Supports Monorepos

Example: Multiple teams contributing to a monorepo can define individual workflow files in .github/workflows/ inside the same repository and get access to their own credentials, which other teams and workflows inside the monorepo cannot access.

To accomplish this, we combine two claims in the bound_claims of this Vault role: sub and job_workflow_ref. As a reminder, we can bind any combination of GitHub JWT claims to a Vault role!

The job_workflow_ref is one of the other supported claims in GitHub’s JWT. Its format is organization/repo/<path to workflow file>@<repo ref>.

"job_workflow_ref": "octo-org/octo-automation/.github/workflows/oidc.yml@refs/heads/main"

The ref at the end of the string signifies the version of the workflow file that is being bound to this configuration and has to match where a workflow run is invoked. For example, if we were constructing a Vault role intended to be used in a production deployment from the main branch of a monorepo, setting @refs/heads/main on the job_workflow_ref means that the specified workflow triggered from the main branch - via most workflow triggers - will succeed, while workflows triggered from something like a pull request will fail, as the job_workflow_ref will end with something like @refs/pull/12345/merge.

resource "vault_jwt_auth_backend_role" "mymonorepo-myteam-myworkflow" {
  role_name = "mymonorepo-myteam-myworkflow"
  bound_claims = {
    sub = "repo:digitalocean/myrepo:ref:refs/heads/main"
    # Only accept the version of the workflow on the main branch
    job_workflow_ref = "digitalocean/myrepo/.github/workflows/myteam-deployment.yml@refs/heads/main"
  }
  # rest of configuration
}

For a workflow in a monorepo that should run from any pull request - but should not expose secrets to any other workflow file - we can use a wildcard in our job_workflow_ref.

resource "vault_jwt_auth_backend_role" "mymonorepo-myteam-myworkflow-prs" {
  role_name = "mymonorepo-myteam-myworkflow-prs"
  bound_claims = {
    sub              = "repo:digitalocean/myrepo:pull_request"
    job_workflow_ref = "digitalocean/myrepo/.github/workflows/myteam-deployment.yml@refs/pull/*"
  }
  # 'glob' makes Vault treat '*' as a wildcard instead of as a literal string
  bound_claims_type = "glob"
  # rest of configuration
}

The workflow file itself is constructed similarly to the previous examples. We recommend that teams working in a monorepo make liberal use of GitHub’s paths/paths-ignore filters so their workflows only trigger when necessary.

on:
  push:
    branches:
      - main
    paths:
      - 'src/teams/myteam/**'

Reusable and shareable workflows

Example: An internal reusable workflow, such as a set of tasks encapsulating publishing artifacts to Artifactory, can access its needed secrets when called from any repository across multiple GitHub organizations. Consumers invoking the workflow do not need to configure anything unique for access to secrets.

Reusable workflows should also make use of sub and job_workflow_ref; however, in this case we add a wildcard to the bound subject. Exactly how the subject should be constructed depends on how widely you want the reusable workflow to be used.

For example, within a GitHub Enterprise Server instance in which every GitHub organization belongs to the company, you could use sub = "repo:*" combined with a specific job_workflow_ref, or replace the bound subject with a similar wildcard claim such as repository = "*". If, however, you want to grant widespread access within only one GitHub organization in your GitHub Enterprise Server instance (or you are on github.com, in which case you should restrict access to just your company’s organization), you can set a wildcard subject like sub = "repo:digitalocean/*". Don’t forget to set bound_claims_type = "glob"!
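A sketch of the instance-wide variant on GitHub Enterprise Server follows. One detail to watch: the default aud claim is the URL of the owning organization, which differs from one organization to the next, so an instance-wide role needs either every organization URL in bound_audiences or a single custom audience. This sketch assumes the latter, with the reusable workflow requesting the hypothetical audience "platform:artifactory" through vault-action’s jwtGithubAudience input; the role name and audience are placeholders.

```hcl
resource "vault_jwt_auth_backend_role" "reusable-workflow-instance-wide" {
  role_name = "reusable-workflow-instance-wide"
  bound_claims = {
    # Any repository on the instance...
    repository = "*"
    # ...but only while running the pinned reusable workflow
    job_workflow_ref = "digitalocean/shared-workflows/.github/workflows/artifactory.yml@refs/heads/main"
  }
  # Treat "*" as a wildcard rather than a literal character
  bound_claims_type = "glob"
  # Hypothetical custom audience requested by the reusable workflow via
  # jwtGithubAudience; default audiences differ per organization.
  bound_audiences = ["platform:artifactory"]
  token_policies  = ["default"] # plus the Artifactory credentials policy
  role_type       = "jwt"
  backend         = "gha"
  user_claim      = "actor"
  token_type      = "batch"
  token_ttl       = 300 # seconds
}
```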

Regardless of the bound subject, your job_workflow_ref should point to the reusable workflow you expect the organization to call. Certain claims in the JWT, such as workflow and ref, describe the caller workflow: the workflow in the developer’s repository that invokes the reusable workflow. In contrast, job_workflow_ref describes the called workflow, which is the workflow that is actually running (our reusable workflow). GitHub provides further information about how the JWT works with reusable workflows. To illustrate the distinction between caller and called workflows, consider the following example:

Let’s say a platform engineering team sets up a reusable workflow to help deploy artifacts to Artifactory. They create this reusable workflow in the repository digitalocean/shared-workflows, at the path .github/workflows/artifactory.yml inside that repo. A developer wants to consume this reusable workflow, so they create a digitalocean/myproject repository containing a .github/workflows/deploy.yml workflow file. The developer’s workflow file might look like:

name: Deploy to Artifactory
on:
  release:
    types:
      - published
jobs:
  deploy:
    name: push to artifactory
    # A job that calls a reusable workflow references it with a job-level uses;
    # such a job cannot also define runs-on or steps
    permissions:
      contents: read
      id-token: write
    uses: digitalocean/shared-workflows/.github/workflows/artifactory.yml@main
    with:
      # placeholder for the inputs the reusable workflow declares
      inputs: "..."

Inside the reusable workflow, a hashicorp/vault-action step retrieves secrets using OIDC. Notably, the permissions block must be set on the developer’s workflow, while the secrets will be retrieved inside the reusable workflow.

When the developer’s workflow file is triggered, the caller workflow will be digitalocean/myproject/.github/workflows/deploy.yml@refs/… and the called workflow will be digitalocean/shared-workflows/.github/workflows/artifactory.yml@refs/… .

Therefore, the Vault role we want to construct, which the reusable workflow will use to retrieve its secrets, is:

resource "vault_jwt_auth_backend_role" "reusable-workflow" {
  role_name = "reusable-workflow"
  bound_claims = {
    sub              = "repo:digitalocean/*"
    job_workflow_ref = "digitalocean/shared-workflows/.github/workflows/artifactory.yml@refs/heads/main"
  }
  bound_claims_type = "glob"
  # rest of configuration
}

This allows any repository inside the digitalocean organization to access the reusable-workflow Vault role, but only from within the called workflow, that is, the reusable workflow at digitalocean/shared-workflows/.github/workflows/artifactory.yml@refs/heads/main. We recommend that such reusable workflow roles pin the ref in job_workflow_ref to the reusable workflow’s default branch (e.g. @refs/heads/main) or to a specific tag. This determines which version of the workflow file others can invoke to successfully authenticate to the Vault role; all other versions of the artifactory.yml reusable workflow will fail to authenticate.
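Pinning to a tag instead of a branch only changes the ref at the end of job_workflow_ref. The sketch below assumes a hypothetical v1 tag on the shared-workflows repository; callers would reference artifactory.yml@v1, which GitHub reports in the token as refs/tags/v1.

```hcl
resource "vault_jwt_auth_backend_role" "reusable-workflow-v1" {
  role_name = "reusable-workflow-v1"
  bound_claims = {
    sub = "repo:digitalocean/*"
    # Only the hypothetical v1 tag of the reusable workflow can authenticate
    job_workflow_ref = "digitalocean/shared-workflows/.github/workflows/artifactory.yml@refs/tags/v1"
  }
  # Needed for the wildcard in sub; the other value contains no "*"
  bound_claims_type = "glob"
  token_policies    = ["default"] # plus the Artifactory credentials policy
  bound_audiences   = ["https://github.com/digitalocean"]
  role_type         = "jwt"
  backend           = "gha"
  user_claim        = "actor"
  token_type        = "batch"
  token_ttl         = 300 # seconds
}
```

Moving the tag to a new commit changes what the role will accept, so a tag used this way should be protected or treated as immutable.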

As a benefit of this construction, any team in the digitalocean organization can use this reusable workflow to push to Artifactory with credentials, but no team has access to the actual secrets in their workflows. They are retrieved and handled inside the reusable workflow, and the caller workflow cannot influence or extract any information from the called workflow that isn’t pre-configured.

Providing Developer-First Security Tooling

With all of these possibilities, however, come a plethora of opportunities to misconfigure a Vault role, resulting in frustratingly vague 400 errors when trying to authenticate to Vault (although that may be improved in recent hashicorp/vault-action versions). That’s not a great developer experience! Asking all of your developers to learn the intricacies of the GitHub JWT bound subject filtering conditions or the impacts of combining sub and job_workflow_ref, or other claims, will lead to a lot of pain. Our previous article emphasizes the importance of security initiatives solving problems for developers, not introducing them!

This is the point where the security team should, with a developer-first security mindset, invest in providing paved path tooling to solve the security concerns - use these least-privilege Vault roles - while solving the developer concern - let me get secrets and move on with my day! The internals of OIDC claim construction within Vault roles should be encapsulated through tooling that makes it easy for developers to get the right Vault role configuration for their use case. At DigitalOcean, the security team offers a command-line wizard to interactively walk a developer through the steps to create a Vault role for their workflow.

The wizard sets up the necessary configuration in Vault and, if the workflow needs GitHub Environments, applies the corresponding changes to the developer’s GitHub repository. Experienced users can also generate configurations non-interactively. This not only provides a paved path for the secrets management that security cares about, but also makes it easier for developers to deploy their engineering pipelines, of which secrets consumption is just a small part. Crucially, the wizard does not ask the developer if they want to do “secret task A” or “secret task B.” The developer is not expected to understand the intricacies involved in making that decision. Instead, the developer is asked which engineering task they want to perform, and the tooling guides them through the relevant security steps for that task.

The wizard also offers to open a pull request against the developer’s repository with a “hello world” deployment workflow that uses their specific Vault role in whichever authentication pattern they requested.

```yaml
name: Your Job

on:
  push:
    branches:
      - main

jobs:
  FROM_THE_MAIN_BRANCH:
    runs-on:
      - Linux # self-hosted runner
    permissions:
      contents: read
      id-token: write
    steps:
      - name: Import Secrets
        id: secrets
        uses: do-actions/hashicorp-vault-action@v2
        with:
          jwtGithubAudience: 'product_security:secrets_example'
          role: 'digitalocean-secrets-product_security-secrets_example-main'
          secrets: |
            secret/data/product_security/secrets_example your_secret | OUTPUT_VALUE_TO_REFERENCE ;
            secret/data/product_security/secrets_example your_secret2 | OUTPUT_VALUE_TO_REFERENCE2 ;

      - name: Your next steps
        run: "echo 'Reference secrets in your steps like this: ${{ steps.secrets.outputs.OUTPUT_VALUE_TO_REFERENCE }}'"
```

The do-actions/hashicorp-vault-action action is an internal vendored version of hashicorp/vault-action with company defaults preconfigured, so developers do not need to set the Vault URL, CA certificate, authentication backend, or other defaults common to our environment.
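One of the defaults such a vendored action hides is the JWT auth backend that trusts GitHub's OIDC issuer. A minimal sketch of that backend follows, assuming github.com rather than GitHub Enterprise Server and the github-actions mount path we mention later in this article; it is an illustration, not our exact configuration.

```hcl
# Minimal sketch (assumption: github.com, not GitHub Enterprise Server).
# JWT auth backend that validates tokens issued by GitHub Actions' OIDC provider.
resource "vault_jwt_auth_backend" "github_actions" {
  description        = "GitHub Actions OIDC"
  path               = "github-actions" # auth mount; becomes the auth.display_name prefix
  type               = "jwt"
  oidc_discovery_url = "https://token.actions.githubusercontent.com"
  bound_issuer       = "https://token.actions.githubusercontent.com"
}
```

With the upstream hashicorp/vault-action, each workflow would instead pass the Vault url, method: jwt, and this mount via the action's path input itself.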

Solving The Security Use Cases

In the previous workflow example, we configure the optional jwtGithubAudience parameter of HashiCorp’s vault-action. We do this to satisfy the security use case we defined above:

- Secrets consumption must be fully auditable - we must be able to determine what repository accessed what Vault role (and therefore consumed what secrets) at some specific time. We must also be able to determine what secrets could be consumed by which repositories or workflows at any given time.

There are a few ways to audit the behavior of workflows accessing Vault secrets through GitHub OIDC. The jwtGithubAudience parameter in HashiCorp’s GitHub action lets us customize the value of the aud claim in the OIDC token. By default, the audience is the URL of the repository owner on GitHub: the GitHub user or organization to which a repo belongs. For the repo https://github.com/digitalocean/myrepo, the aud claim is https://github.com/digitalocean (replace github.com with your GitHub Enterprise Server domain, if necessary). We have chosen to enforce a team:service format for audiences, so we can consistently attribute individual Vault roles and their activity to the team and service that own the secrets and usage of a given Vault role.
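On the Vault side, a custom audience only helps if the role requires it. The following sketch pairs a role with the example workflow above: it accepts only tokens minted with the product_security:secrets_example audience from the main branch, and grants read access to the secret path that workflow consumes. The mount path, repository name, and policy name are assumptions for illustration. Keeping policies and roles in code like this is also what makes the second half of the audit requirement answerable: which secrets a given repository could consume is visible from the role's bound claims and attached policies.

```hcl
# Sketch only: the role name, audience, and secret path come from the example
# workflow; the mount path, repository, and policy name are hypothetical.

# Read access to the KV v2 secret the example workflow consumes.
data "vault_policy_document" "secrets_example_read" {
  rule {
    path         = "secret/data/product_security/secrets_example"
    capabilities = ["read"]
  }
}

resource "vault_policy" "secrets_example_read" {
  name   = "product_security-secrets_example-read" # hypothetical policy name
  policy = data.vault_policy_document.secrets_example_read.hcl
}

# Accept only tokens with the team:service audience, issued for pushes to main.
resource "vault_jwt_auth_backend_role" "secrets_example_main" {
  backend         = "github-actions" # assumed JWT auth mount path
  role_type       = "jwt"
  role_name       = "digitalocean-secrets-product_security-secrets_example-main"
  bound_audiences = ["product_security:secrets_example"]
  bound_claims    = { sub = "repo:digitalocean/secrets_example:ref:refs/heads/main" } # hypothetical repo
  user_claim      = "job_workflow_ref"
  token_policies  = [vault_policy.secrets_example_read.name]
  token_ttl       = 300 # seconds
  token_type      = "batch"
}
```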

Default User Claim

Additionally, we’ve set the default user_claim to job_workflow_ref. GitHub’s examples typically use the actor claim; however, we believe that job_workflow_ref gives us more actionable information within an audit log entry than the actor claim.

The user claim is how you want Vault to uniquely identify a client and can be set to any claim present in the GitHub JWT. This value is used for the name of the identity entity alias upon a successful login. This essentially means that, in Vault’s audit log, the value of the user claim will be retrievable as auth.display_name.

Using job_workflow_ref allows us to attribute activity on Vault’s end to the specific GitHub repository and workflow file that triggered it, as well as to the pull request, tagged release, or other activity tied to that Vault role session. The auth.display_name syntax is [auth backend mount name]-[value of the chosen user claim]. In the above screenshot, our JWT backend is mounted at github-actions, and the rest of the display name is the job_workflow_ref value for this audit log entry.

We use job_workflow_ref instead of actor because the actor claim reflects the github.actor field in the github context and corresponds to the username who triggered a workflow run. However, re-running a workflow will re-use the original actor that kicked off the initial workflow run, even if that is not the current actor that just triggered the re-run.

In recent months, GitHub has added a github.triggering_actor field to the github context to differentiate between the actor who initiated a workflow run and whoever triggered a re-run of it. However, the GitHub JWT’s actor claim currently always refers to the github.actor context attribute. Therefore, we set the default user claim to job_workflow_ref and, when we need to identify an actor, correlate the workflow runs specified by that ref with GitHub audit logs. With an example like the image above, we can also go to the pull request in question and review its activity.

```hcl
resource "vault_jwt_auth_backend_role" "only_main_branch" {
  # …
  user_claim   = "job_workflow_ref"
  role_name    = "myrepo-main"
  bound_claims = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
}
```
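If you want some of the other GitHub JWT claims next to job_workflow_ref without changing the user claim, Vault's JWT roles accept claim_mappings, which copies named claims into token and alias metadata. The sketch below is an option we have not described using ourselves; the metadata key names, mount path, and resource name are illustrative assumptions. Note that the mapped actor claim is still github.actor, so it does not solve the re-run attribution problem described above.

```hcl
# Sketch: keep job_workflow_ref as the user claim and copy a few other GitHub
# JWT claims into metadata. Metadata key names are illustrative assumptions.
resource "vault_jwt_auth_backend_role" "myrepo_main_with_metadata" {
  backend         = "github-actions" # assumed mount path
  role_type       = "jwt"
  role_name       = "myrepo-main"
  bound_audiences = ["https://github.com/digitalocean"]
  bound_claims    = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
  user_claim      = "job_workflow_ref"

  # claim name (key) => metadata field name (value)
  claim_mappings = {
    actor      = "github_actor"      # still github.actor: re-runs show the original actor
    run_id     = "github_run_id"     # identifies the workflow run in GitHub
    repository = "github_repository" # owner/repo that requested the token
  }
}
```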

Additional Cost Considerations for Vault Enterprise Customers

Vault Enterprise customers should be aware that the user_claim setting can significantly affect the number of client entities generated, which in turn may substantially inflate your bill. HashiCorp bills Vault usage, in part, by the number of unique client entities connecting to Vault within a given period. For HCP Vault, this period is monthly; for self-hosted Vault Enterprise, it is annual. Using job_workflow_ref as the user_claim results in ephemeral, one-time-use clients for every GitHub Actions workflow invocation. When we first configured this setting, our client count increased more than 10x in just one month. Because of the annual license attribution, every additional month we ran this method would have driven the total client count ever higher, as all of the ephemeral, one-time-use clients generated this way count permanently toward the annual total. Other OIDC-based authentication methods to Vault do not count ephemeral leases as permanent clients, but this is a side effect of the requirements for configuring GitHub authentication through the JWT auth method.

While the same number of JWT logins to Vault occur either way, we recommend that Vault Enterprise customers set the user_claim to actor. As noted above, this removes non-repudiation guarantees and collapses user_claim audit logging into an uninformative value for investigation, but it does not generate an inordinate client count.

Note that this limitation does not exist on Vault Community Edition, and we recommend that Community users set job_workflow_ref as the user_claim to benefit from the audit transparency.
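If the same Terraform configuration has to serve both editions, one way to encode this recommendation is a single switch on the role. This is a minimal sketch assuming a hypothetical vault_enterprise input variable and the github-actions mount path; neither is part of our module.

```hcl
# Sketch: choose the user_claim per Vault edition.
# Assumption: var.vault_enterprise is a hypothetical input, not part of our module.
variable "vault_enterprise" {
  type        = bool
  default     = false
  description = "True on Vault Enterprise or HCP Vault, where client counts drive billing."
}

resource "vault_jwt_auth_backend_role" "myrepo_main" {
  backend         = "github-actions" # assumed mount path
  role_type       = "jwt"
  role_name       = "myrepo-main"
  bound_audiences = ["https://github.com/digitalocean"]
  bound_claims    = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }

  # actor: entity aliases keyed by GitHub username, so client counts stay bounded.
  # job_workflow_ref: richer audit entries, but a new client for every run.
  user_claim = var.vault_enterprise ? "actor" : "job_workflow_ref"
}
```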

Short-Lived TTLs

And as for our other security use case:

- Credentials must be short-lived. Compromise of any workflow must present an extremely minimal window of opportunity for a malicious entity to exploit these credentials.

As mentioned previously, we set the Vault token TTL to five minutes by default:

```hcl
resource "vault_jwt_auth_backend_role" "only_main_branch" {
  # …
  token_ttl    = 300 # seconds
  token_type   = "batch"
  role_name    = "myrepo-main"
  bound_claims = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
}
```

An attacker who compromises our CI/CD environment and extracts the local environment or secrets present in a workflow will find they have only five minutes to use the granted Vault token before it becomes useless. If GitHub Actions follows the path of other CI/CD providers in recent years and our workflows are compromised via their platform, any malicious activity within the five-minute validity period of a Vault token could be traced in our logs to a specific job_workflow_ref, and from there to all Vault activity connected to it, without needing to sift through large volumes of legitimate traffic.

Since we use batch tokens for this OIDC use case, generating thousands of auth tokens on Vault is extremely cheap, and these tokens cannot be renewed, so they expire at their initial TTL. We lose the ability to revoke or list these tokens, but they live for such a short time that this is not a concern for us.

There are additional token attributes that could be set on the Vault role configuration, but we do not currently use them. We set token_ttl to a short default and allow teams to customize it if they have a use case for a longer one. Responses in the Vault audit log include the field auth.token_ttl, so we can spot unexpectedly long TTLs and investigate further.
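Audit-log monitoring catches long TTLs after the fact. A complementary guardrail is to keep the five-minute default while rejecting oversized overrides at plan time. The sketch below uses a Terraform variable validation block; the one-hour ceiling, the mount path, and the resource name are hypothetical choices, not values we enforce.

```hcl
# Sketch: default to a 5-minute TTL, allow team overrides, and reject anything
# above a hypothetical 1-hour ceiling when Terraform plans.
variable "token_ttl" {
  type        = number
  default     = 300 # seconds
  description = "Vault token TTL for this workflow's role."

  validation {
    condition     = var.token_ttl > 0 && var.token_ttl <= 3600
    error_message = "The token_ttl value must be between 1 and 3600 seconds."
  }
}

resource "vault_jwt_auth_backend_role" "myrepo_main_ttl" {
  backend         = "github-actions" # assumed mount path
  role_type       = "jwt"
  role_name       = "myrepo-main"
  bound_audiences = ["https://github.com/digitalocean"]
  bound_claims    = { sub = "repo:digitalocean/myrepo:ref:refs/heads/main" }
  user_claim      = "job_workflow_ref"
  token_type      = "batch" # not renewable, so the TTL is a hard limit
  token_ttl       = var.token_ttl
}
```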

Open Sourced Terraform Module

We have open sourced a Terraform module to help organizations configure GitHub OIDC authentication to Vault with the fine-grained role claims discussed in this article. Organizations define the audience, desired Vault role name, bound subject, and any additional claims they need, and the module wires everything up in Vault.

```hcl
module "github-vault-oidc" {
  source  = "digitalocean/github-oidc/vault"
  version = "~> 2.1.0"

  oidc_bindings = [
    {
      audience : "https://github.com/artis3n",
      vault_role_name : "oidc-dev-role",
      bound_subject : "repo:artis3n/github-oidc-vault-example:pull_request",
      vault_policies : [
        vault_policy.dev.name,
      ],
    },
    {
      audience : "https://github.com/artis3n",
      vault_role_name : "oidc-deploy-role",
      bound_subject : "repo:artis3n/github-oidc-vault-example:ref:refs/heads/main",
      vault_policies : [
        vault_policy.deployment.name,
      ],
    },
  ]
}

data "vault_policy_document" "dev" {
  rule {
    path         = "secret/data/dev/foo"
    capabilities = ["read"]
  }
}

resource "vault_policy" "dev" {
  name   = "oidc-dev"
  policy = data.vault_policy_document.dev.hcl
}

data "vault_policy_document" "deployment" {
  rule {
    path         = "secret/data/prod/bar"
    capabilities = ["read"]
  }
}

resource "vault_policy" "deployment" {
  name   = "oidc-deploy"
  policy = data.vault_policy_document.deployment.hcl
}
```

To help organizations manage these Vault roles at scale, the module also supports defining roles in JSON files: individual development teams can be given CODEOWNERS ownership of their own Vault role files, and the module imports all the files and creates the requested Vault roles. Alternatively, Vault operators can simply use the JSON files to better organize their GitHub OIDC Vault roles.
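For a sense of the general pattern, the sketch below loads one JSON file per role with core Terraform functions and passes the result to the module's oidc_bindings input. It is not the module's own JSON support, whose inputs we do not reproduce here; the oidc_bindings/ directory name is a hypothetical choice, and each file is assumed to hold a single object with the same keys as an oidc_bindings entry (audience, vault_role_name, bound_subject, vault_policies).

```hcl
# Sketch: one JSON file per team-owned role under oidc_bindings/ (hypothetical
# directory), each file protected by a CODEOWNERS entry for the owning team.
locals {
  oidc_binding_files = fileset("${path.module}/oidc_bindings", "*.json")

  # Decode each file into an object shaped like an oidc_bindings entry.
  oidc_bindings = [
    for f in local.oidc_binding_files :
    jsondecode(file("${path.module}/oidc_bindings/${f}"))
  ]
}

module "github-vault-oidc" {
  source  = "digitalocean/github-oidc/vault"
  version = "~> 2.1.0"

  oidc_bindings = local.oidc_bindings
}
```

Because fileset returns paths in lexical order, adding a team's file only appends a binding and leaves existing roles untouched in the plan.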

We are working on building a generic version of our wizard to help users create the appropriate oidc_bindings objects for their desired use cases, which we will include in this repository in the future.

Wrapping Up

In this article, we discussed DigitalOcean’s approach to securing CI/CD through GitHub Actions, OIDC, and HashiCorp Vault. We showed you five examples of real-world developer use cases to help build a mental model you can apply to your organization, and we showed you how we’re living our mission to build developer-first approaches to security and secrets management with our paved path secrets wizard.

If you would like to explore setting up GitHub OIDC Vault roles in a hands-on course following the first three developer use cases from this article, check out this GitHub Skills course.

We hope this was enlightening and will help you more easily apply safe secrets management concepts to your organization. We plan to share more details about our developer-first security tooling and approach in future articles.

Ari Kalfus is the Manager of Product Security at DigitalOcean. The Product Security team are internal advisors focused on enabling the business to safely innovate and experiment with risks. The team guides secure architecture design and reduces risk in the organization by constructing guardrails and paved paths that empower engineers to make informed security decisions.
